Thought an art thread for AI generated images would be fun. There is a few different AIs now and they are really good.

/cyb/ - Cyberpunk Fiction and Fact

Cyberpunk is the idea that technology will condemn us to a future of totalitarian nightmares here you can discuss recent events and how technology has been used to facilitate greater control by the elites, or works of fiction

416 replies and 413 files omitted.

>>2162

I'll need the prompt and model used for those pics. If I just said "(My Little Pony) garden statue" with the default model that came with the AI when I downloaded it I doubt I'll get good results, but I'll give it a go.

By the way, what does "Tiling" do? Whenever I check that box it just makes the output look inferior. Is there some purpose to it I'm missing?

I'll need the prompt and model used for those pics. If I just said "(My Little Pony) garden statue" with the default model that came with the AI when I downloaded it I doubt I'll get good results, but I'll give it a go.

By the way, what does "Tiling" do? Whenever I check that box it just makes the output look inferior. Is there some purpose to it I'm missing?

>>2163

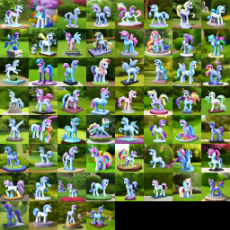

The one I had limited success with (but takes 2-3 minutes per image so I was not able to do to much) was:

>pony statue, majestic, alicorn, 4k hi-res, detailed, granite, cracked surface, weathered

>Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 174254891, Size: 512x512

The one I had limited success with (but takes 2-3 minutes per image so I was not able to do to much) was:

>pony statue, majestic, alicorn, 4k hi-res, detailed, granite, cracked surface, weathered

>Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 174254891, Size: 512x512

>>2164

2-3 minutes per image? That must suck. How many images can you generate at once before your PC runs out of memory?

One time I left my room with an autoclicker generating more sets of images every minute. By the time I got back, I had a few thousand.

2-3 minutes per image? That must suck. How many images can you generate at once before your PC runs out of memory?

One time I left my room with an autoclicker generating more sets of images every minute. By the time I got back, I had a few thousand.

>>2166

>How many images can you generate at once before your PC runs out of memory?

I can only run one at a time, and at times have to close other apps that uses memory in order for it to run.

Are you using the Stable Diffusion Model or Waifu model instead of the Pony model? The Pony Model is good and you should try it

https://huggingface.co/AstraliteHeart/pony-diffusion

https://mega.nz/file/ZT1xEKgC#Xxir5udMmU_mKaRZAbBkF247Yk7DqCr01V0pDzSlYI0

>How many images can you generate at once before your PC runs out of memory?

I can only run one at a time, and at times have to close other apps that uses memory in order for it to run.

Are you using the Stable Diffusion Model or Waifu model instead of the Pony model? The Pony Model is good and you should try it

https://huggingface.co/AstraliteHeart/pony-diffusion

https://mega.nz/file/ZT1xEKgC#Xxir5udMmU_mKaRZAbBkF247Yk7DqCr01V0pDzSlYI0

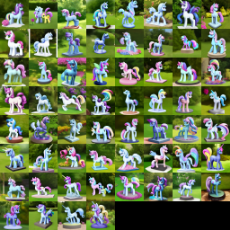

>>2167

Pony model loaded.

Here are some new AI generated pony statues, image sets 3 and 4 were made with the seed you posted instead of a random seed.

Had to split them up because trying to post the next 4 intact broke some kind of limit. "Request Entity too large".

Pony model loaded.

Here are some new AI generated pony statues, image sets 3 and 4 were made with the seed you posted instead of a random seed.

Had to split them up because trying to post the next 4 intact broke some kind of limit. "Request Entity too large".

Anonymous

No.2173

>>2172

Strange that the first image in the set using same seed and prompt didn't yield the same image as I got as it should have done that. Subsequent images when given a seed should give previous_seed+1 when running batches.

Strange that the first image in the set using same seed and prompt didn't yield the same image as I got as it should have done that. Subsequent images when given a seed should give previous_seed+1 when running batches.

>>2176

Strange. For reference the arguments I use to start Stable Diffusion is:

>--ckpt pony_sfw_80k_safe_and_suggestive_500rating_plus-pruned.ckpt --lowvram --opt-split-attention

Other than that I have the standard settings (no scripts or face fixes or anything) and standard txt2img

Strange. For reference the arguments I use to start Stable Diffusion is:

>--ckpt pony_sfw_80k_safe_and_suggestive_500rating_plus-pruned.ckpt --lowvram --opt-split-attention

Other than that I have the standard settings (no scripts or face fixes or anything) and standard txt2img

>>2177

>lowvram --opt-split-attention

I was using those exact settings except for that part. They just reduce the load on your computer and have no effect on the resulting image, right?

>lowvram --opt-split-attention

I was using those exact settings except for that part. They just reduce the load on your computer and have no effect on the resulting image, right?

>>2178

They should just reduce the memory used and not change what is produced. Just posted full list of arguments in case I'm wrong on that. It was just strange that you didn't get the same image using same prompt and seed.

They should just reduce the memory used and not change what is produced. Just posted full list of arguments in case I'm wrong on that. It was just strange that you didn't get the same image using same prompt and seed.

>>2179

>It was just strange that you didn't get the same image using same prompt and seed.

You see, these AI's no longer "mash" images together with the hope of producing a coherent pic.

They basically emulate human creativity.

Artists need external inputs as well. They consciously and unconsciously absorb bits and pieces from every other artwork they may have seen before. Sometimes it may even be apparently unrelated life experiences.

Regardless, when artists draw inspiration from other art sources. They are pretty much doing the same thing that AI's do.

It's impossible to trace back the source pics. What AI's make is about as original as what any human could've conceived.

It makes sense you don't always get the same results, even when all things are equal.

>It was just strange that you didn't get the same image using same prompt and seed.

You see, these AI's no longer "mash" images together with the hope of producing a coherent pic.

They basically emulate human creativity.

Artists need external inputs as well. They consciously and unconsciously absorb bits and pieces from every other artwork they may have seen before. Sometimes it may even be apparently unrelated life experiences.

Regardless, when artists draw inspiration from other art sources. They are pretty much doing the same thing that AI's do.

It's impossible to trace back the source pics. What AI's make is about as original as what any human could've conceived.

It makes sense you don't always get the same results, even when all things are equal.

Anonymous

No.2183

>>2182

>It makes sense you don't always get the same results, even when all things are equal.

Running same seed and prompt I get same image every time. Also running others prompt and seeds (I seen people post) I get same image they get when using the same model they used.

>It makes sense you don't always get the same results, even when all things are equal.

Running same seed and prompt I get same image every time. Also running others prompt and seeds (I seen people post) I get same image they get when using the same model they used.

Anonymous

No.2184

>>2182

I don't think the AI is updating its own model with data from the pictures it generates.

I hope it isn't. I don't want my AI to give itself dementia by using its garbled output as new input.

By now its garbled output probably outnumbers the number of images it was trained on.

I don't think the AI is updating its own model with data from the pictures it generates.

I hope it isn't. I don't want my AI to give itself dementia by using its garbled output as new input.

By now its garbled output probably outnumbers the number of images it was trained on.

Anonymous

No.2190

1667953434.mp4 (4.9 MB, Resolution:576x1024 Length:00:00:41, video_2022-11-07_12-36-29.mp4) [play once] [loop]

Anonymous

No.2193

Does anyone have any good images? I tried doing a cursory search on ponerpics and the first few pages were horrid dogshit.

The era of abstract merchants has reached a new golden age.

Merchants are being produced faster than the ADL could ever hope to flag them.

Merchants are being produced faster than the ADL could ever hope to flag them.

Anonymous

No.2236

Anonymous

No.2237

>>2235

Idk. I got them from Discord.

If you find out any methods, do share. I want to mass produce merchants.

Idk. I got them from Discord.

If you find out any methods, do share. I want to mass produce merchants.

Anonymous

No.2245

1668994325_1.png (722.5 KB, 1280x768, 00036-1416231330-green_eyes_s.png)

1668994325_2.png (281.3 KB, 640x384, 00033-1191004003-green_eyes_s.png)

Anonymous

No.2246

1668994653_1.png (1.1 MB, 1280x768, 00050-4283156501-watercolor.png)

1668994653_2.png (334.4 KB, 512x512, 00008-3025926800-safe_hioshir.png)

1668994653_3.png (319.5 KB, 512x512, 00019-2923236971-t-hoodie_AND_.png)

1668994653_4.png (289.2 KB, 512x512, 00002-3251689543-by_lumineko_A.png)

Pixiv has lost its collective mind recently (more than before).

It's impossible to use the site without being recommended pics of [i]pregnant toddlers!

It's impossible to use the site without being recommended pics of [i]pregnant toddlers!

Anonymous

No.2258

1669184042.png (11.3 MB, 3072x2560, Twilight Sparkle supposedly.png)

I got the new Everything V3 model.

It seems more varied than the previous smaller Waifu model I was using.

But it also loves to wash out the colours of anything I make with it. So I often have to saturate this desaturated art output until it looks right.

It seems more varied than the previous smaller Waifu model I was using.

But it also loves to wash out the colours of anything I make with it. So I often have to saturate this desaturated art output until it looks right.

"Twilight Sparkle"

>>2273

Nice. Not knowing prompt but adding "(unicorn)" to prompt and "(text), devil" to negative prompt might fix some of the devil horns and and text. Love how AI art is evolving with better and better models.

Nice. Not knowing prompt but adding "(unicorn)" to prompt and "(text), devil" to negative prompt might fix some of the devil horns and and text. Love how AI art is evolving with better and better models.

Anonymous

No.2276

>>2274

Speaking of AI evolution how do I update my installation of Stable Diffusion? I heard the new version has no limit on text input and is better at drawing hands.

Speaking of AI evolution how do I update my installation of Stable Diffusion? I heard the new version has no limit on text input and is better at drawing hands.

Anonymous

No.2278

>>2277

The way I do it is that I used git to get the initial code and run an git update in the bat file used to start SD.

So initially do an

>git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui

Then in bat file I do

>...

>set COMMANDLINE_ARGS=--ckpt pony_sfw_80k_safe_and_suggestive_500rating_plus-pruned.ckpt --lowvram --opt-split-attention

>

>cd stable-diffusion-webui

>git pull

>call webui.bat

>cd ..

[Read more] The way I do it is that I used git to get the initial code and run an git update in the bat file used to start SD.

So initially do an

>git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui

Then in bat file I do

>...

>set COMMANDLINE_ARGS=--ckpt pony_sfw_80k_safe_and_suggestive_500rating_plus-pruned.ckpt --lowvram --opt-split-attention

>

>cd stable-diffusion-webui

>git pull

>call webui.bat

>cd ..

Wtf is he doing to edit the AI generated art and get new ones like that? [YouTube] Waifu Diffusion AI speed paint![]()

>>2281

I assumed he did, but looked at description and he is using the Krita plugin for Stable Diffusion that runs an img2img with prompt. It can take basic input and you have to tell it what it should draw and weight how much it should resemble the input image. I am not fully sure how the weights should be but I think the Krita plugin is fairly easy to use.

First get Krita https://krita.org/

Then install the Krita plugin https://github.com/sddebz/stable-diffusion-krita-plugin

You can do the same in Stable Diffusion webui directly by going to the img2img tab.

I assumed he did, but looked at description and he is using the Krita plugin for Stable Diffusion that runs an img2img with prompt. It can take basic input and you have to tell it what it should draw and weight how much it should resemble the input image. I am not fully sure how the weights should be but I think the Krita plugin is fairly easy to use.

First get Krita https://krita.org/

Then install the Krita plugin https://github.com/sddebz/stable-diffusion-krita-plugin

You can do the same in Stable Diffusion webui directly by going to the img2img tab.

>>2283

Have you tried using that setting where your generator makes the image tiny and then scales it up?

Have you tried using that setting where your generator makes the image tiny and then scales it up?

>>2287

I have not tried upscaling or any of the fancy options as the biggest I can generate is 512x512 and even that is pushing my card to the limits. And it takes a couple of minutes to generate an image. So experimenting with settings and what it does is an exercise in patience and remembering what I did half an hour ago and if my changes actually had an impact.

I have not tried upscaling or any of the fancy options as the biggest I can generate is 512x512 and even that is pushing my card to the limits. And it takes a couple of minutes to generate an image. So experimenting with settings and what it does is an exercise in patience and remembering what I did half an hour ago and if my changes actually had an impact.

>>2288

Damn, that sucks. Back when I had to make do with a piece of shit laptop that would take multiple minutes to boot up and do basic tasks like open the file explorer or open a webpage, I ran Fallout New Vegas at barely 10 frames a second even with the lowest graphics settings and as few mods as possible. The game was borderline unplayable especially when combat started so I had to rely on companions basically doing all combat for me. The lag didn't fuck their attacks up as much. Even the fucking word processor lagged with that thing. A word processor!

Damn, that sucks. Back when I had to make do with a piece of shit laptop that would take multiple minutes to boot up and do basic tasks like open the file explorer or open a webpage, I ran Fallout New Vegas at barely 10 frames a second even with the lowest graphics settings and as few mods as possible. The game was borderline unplayable especially when combat started so I had to rely on companions basically doing all combat for me. The lag didn't fuck their attacks up as much. Even the fucking word processor lagged with that thing. A word processor!

[Reply] [Last 50 Posts] [Last 100 Posts] [Last 200 Posts]

You are viewing older replies. Click [View All] or [Last n Posts] to vew latest posts.

Clicking update will load all posts posted after last post on this page.

Post pagination: [Prev] [1-50] [51-100] [101-150] [151-200] [201-250] [251-300] [301-350] [351-400] [401-450] [451-466] [Next] [Live (last 50 replies)]

466 replies | 449 files | 74 UUIDs | Page 4

[Add to Thread Watcher]

Ex: Type :littlepip: to add Littlepip

Ex: Type :littlepip: to add Littlepip  Ex: Type :eqg-rarity: to add EqG Rarity

Ex: Type :eqg-rarity: to add EqG Rarity