This might be interesting to some of you. Here are leaked documents from Facebook showing how their moderators are trained to define and look for "hate speech." Interestingly, they say that white nationalism is acceptable.

>According to leaked internal documents obtained by Motherboard, the 2017 Charlottesville protests were a moment of intense introspection for Facebook, which scrambled to redefine what they consider “hate speech” and to educate content moderators about American white nationalists.

>A log of updates to hate speech policy documents show some of the new phrases and sentiments that were defined as hate speech following the Charlottesville protest. In November 2017, trainers added the comparison of Mexican people to worms as an example of hate speech, in December they added the comparison of Muslims and pigs, and in February, trainers added that referring to transgender people as “it” rather than their preferred pronouns was hate speech.

>the slides stated that the company does not “allow praise, support, or representation of white supremacy as an ideology” but does allow positions on white nationalism and separatism to be praised or discussed.

>Facebook notes that nationalism as an ideology is not specifically racist, stating that it is an “extreme right movement and ideology, but it doesn’t seem to be always associated with racism (at least not explicitly).” Facebook then notes that “In fact, some white nationalists carefully avoid the term supremacy because it has negative connotations.”

>Another slide asks: “Can you say you’re a racist on Facebook?” Facebook’s official response to this is “No. By definition, as a racist, you hate on at least one of our characteristics that are protected.”

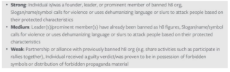

>High profile users, individuals, and organizations are classified as hate groups based on “strong, medium, and weak signals,” according to another slide. A strong signal would be a user that is a founder of a prominent “h8 org” as Facebook refers to them, a medium signal would include using a logo or symbol from a banned hate group or repeatedly using dehumanizing language towards certain groups.

>they evaluate “whether an individual or group should be designated as a hate figure or organization based on a number of different signals, such as whether they carried out or have called for violence against people based on race, religion or other protected categories.”

And here is the relationship with the Anti-Defamation League:

>Facebook says that they do not classify every organization listed as a hate group by the Anti Defamation League as a hate group on their platform, but states that: “Online extremism can only be tackled with strong partnerships which is why we continue to work closely with academics and organisations, including the Anti-Defamation League, to further develop and refine this process.”

>“Our policies against organized hate groups and individuals are longstanding and explicit — we don’t allow these groups to maintain a presence on Facebook because we don’t want to be a platform for hate. Using a combination of technology and people we work aggressively to root out extremist content and hate organizations from our platform.”

http://www.breitbart.com/tech/2018/05/25/leaked-documents-show-facebooks-internal-turmoil-about-hate-speech/

/mlpol/ - My Little Politics

Archived thread

>>149147

This is part of the "Silent Weapons for Quiet Wars" form of double-think that was based off the "protocols" of some pig-fucking kikes. Wait a couple weeks to see what the shekelbergs at Apple have cooked up, it is pure hasbara triple-think.

Very interesting. Wouldn't expect this.

I've encountered only a handful of genuine white "supremacists" and they were simply spergs. The existence of such a distinction exists because FB can claim to allow "open political discussion" while underhandedly zucc'ing white nationalists who are called "supremacists" by leftists. And, of course, if your organization ever once shared an edgy meme you can bet your toes you'll be called a "h8 org."

>>149147

Nice that they say they allow white nationalism. Obviously we should take advantage of this and build white nationalist groups in these theatres of operation.